Latent Space Analysis

Utilize information learned in the latent space of deep neural networks to extract features and analyze data.

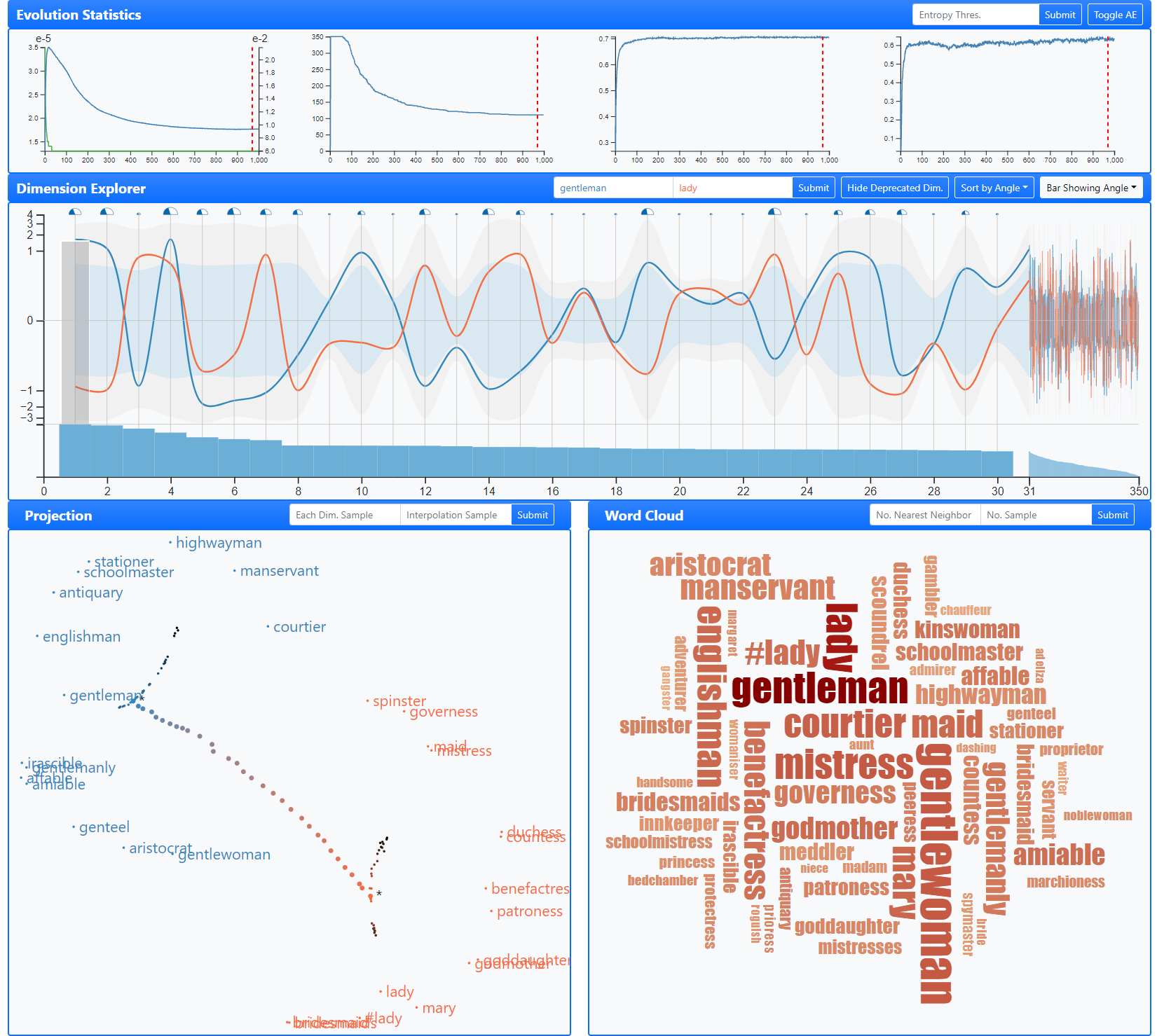

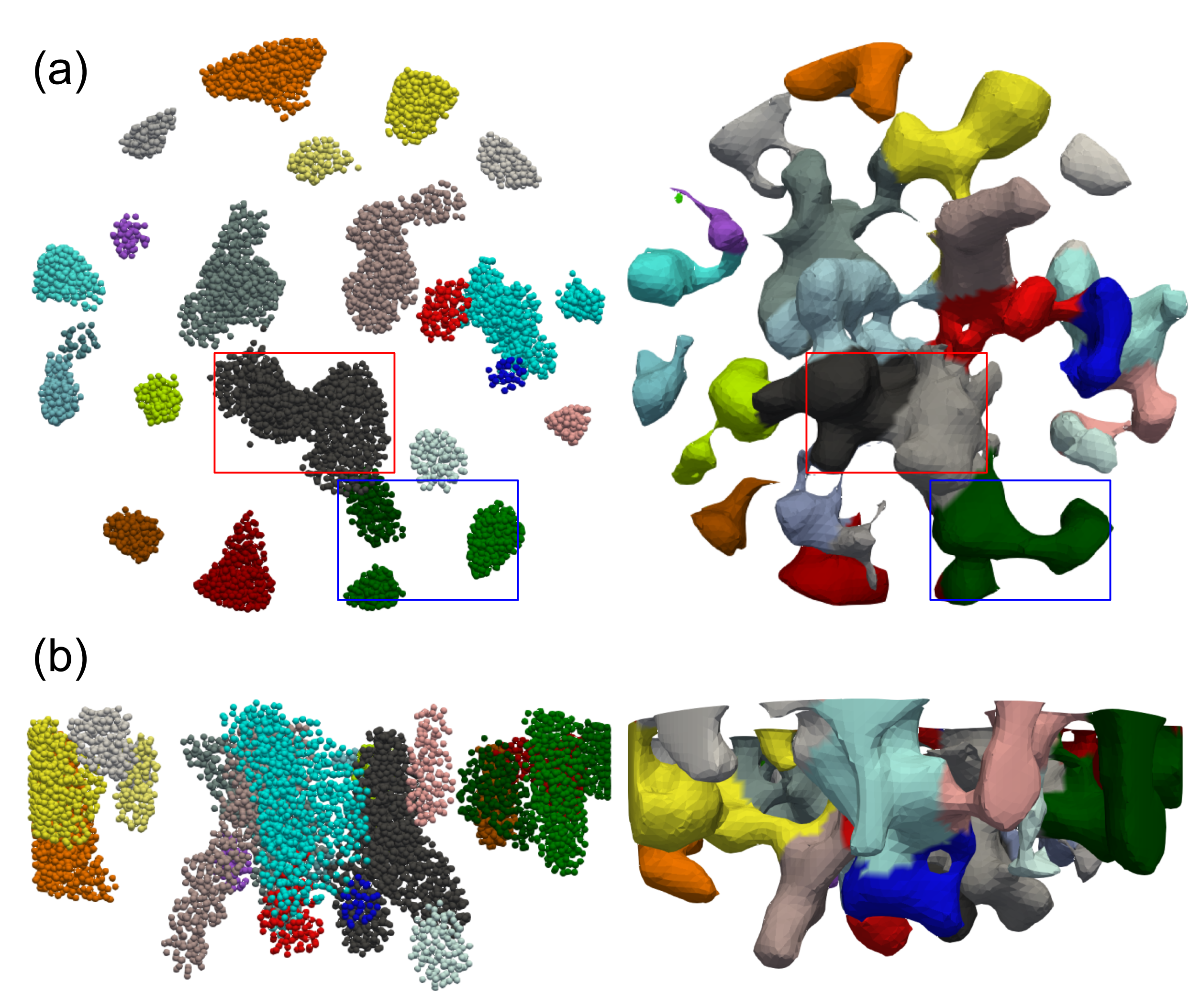

We develop tools that compress and make sense of learned representations so experts can explore data faster and with more confidence. In Compressing and Interpreting Word Embeddings with Latent Space Regularization and Interactive Semantics Probing (Li et al., 2023), we add latent space regularization and interactive semantics probing to shrink vectors while revealing human-readable axes (e.g., sentiment, tense) for transparent NLP analysis. In Local Latent Representation Based on Geometric Convolution for Particle Data Feature Exploration (Li & Shen, 2022), we learn geometry-aware local latents that capture neighborhood structure in particle simulations, enabling feature discovery (shocks, vortices, mixing) without full-resolution passes. In IDLat (Shi et al., 2022), we introduce importance-driven latent generation that allocates capacity where it matters most, speeding up visual exploration while preserving critical phenomena. Together, these works turn black-box embeddings into compact, controllable, and interpretable spaces for both language and scientific data.

References

2023

- Compressing and interpreting word embeddings with latent space regularization and interactive semantics probing. Information Visualization, 2023.

2022

- Local latent representation based on geometric convolution for particle data feature exploration. IEEE Transactions on Visualization and Computer Graphics, 2022.
- VDL-Surrogate: A view-dependent latent-based model for parameter space exploration of ensemble simulations. IEEE Transactions on Visualization and Computer Graphics, 2022.